In Architectural Issues While Scaling Web Applications, I pointed to caching as a solution to some of the scaling issues. In this post I will cover different ways of caching and how caching can reduce the load on a web application and hence improve its performance and scalability. I will cover web application and database caching in the next post.

Caching is the most common way to improve the performance or scalability of a web application. In fact, the effectiveness of caching is arguably the main reason developers feel confident avoiding premature optimisation, or postponing the fix for a probable performance issue until the issue is actually detected. The underlying assumptions behind this confidence are (a) the performance optimisation may not be required and (b) if it is required, the performance issue can be fixed by caching.

Caching is effective at improving performance because most applications are read heavy and perform a lot of repetitive operations. So an application’s performance can be greatly improved by not repeating the same resource-intensive operation several times. An operation could be resource intensive due to IO activity, such as accessing a database or service, or due to computation that is CPU intensive.

What is caching?

Caching is storing the result of an operation so that it can be used later instead of repeating the operation.

Cache hit ratio

Before executing an operation, a check is performed to determine whether the operation's result (the requested data) is already available in the cache. If the requested data is available, it is called a cache hit; if it is not available in the cache, it is called a cache miss. When a cache miss occurs, the requested operation is executed and the result is cached for future use (lazy cache population).

Cache hit ratio is the number of cache hits divided by the total number of requests for an operation. For efficient utilisation of memory, the cache hit ratio should be high.

A cache hit ratio close to 0 means most of the requests miss the cache and the requested operation is executed almost every time. A low cache hit ratio could also lead to large cache memory usage, as every cache miss could mean a new addition to cache storage. A cache hit ratio close to 1 means most of the requests are cache hits and the requested operation is almost never executed.
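The hit, miss and hit ratio definitions above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `LazyCache` class and the `load_profile` function are hypothetical names invented for this example, the cache is a plain in-memory dictionary, and nothing is ever evicted.

```python
from typing import Any, Callable, Dict, Hashable


class LazyCache:
    """Hypothetical in-memory cache, populated lazily, that tracks hits and misses."""

    def __init__(self) -> None:
        self._store: Dict[Hashable, Any] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        # Cache hit: return the stored result without running the operation.
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: run the operation and keep the result for future requests.
        self.misses += 1
        value = compute()
        self._store[key] = value
        return value

    def hit_ratio(self) -> float:
        # Hits divided by the total number of requests (0.0 before any request).
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def load_profile(user_id: int) -> dict:
    # Stand-in for a resource-intensive operation such as a database query.
    return {"id": user_id, "name": f"user-{user_id}"}


cache = LazyCache()
for user_id in [1, 2, 1, 1, 2]:
    cache.get(user_id, lambda uid=user_id: load_profile(uid))

print(cache.hit_ratio())  # 3 hits out of 5 requests -> 0.6
```

Here the first request for each user is a miss and every repeat is a hit, so a workload with more repetition pushes the ratio closer to 1.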

Cache population

A cache can be populated lazily, after executing the operation for the very first time, or it can be pre-populated at application start-up or by another process or background job. Lazy cache population is the most common usage pattern, but cache population by a background job can be effective when it is possible.

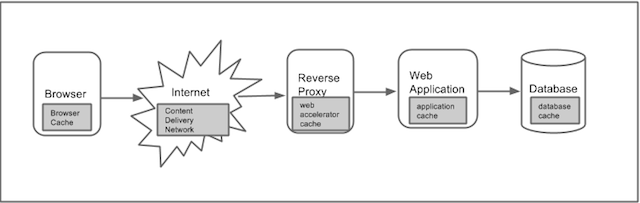

Caching Layers in Web Architecture

There are several layers in typical web application architecture where caching can be performed.

As shown in the above diagram, caching can be done at every layer of the architecture, from the browser to the database. Let us walk through each caching layer, looking at how and what can be cached there, and at the pros and cons of caching at that layer.

A few general points to note in the above diagram:

- Caching at the leftmost layers is better for latency.

- Caching at the rightmost layers gives better control over the granularity of caching and over the ways to clear or refresh the cache.

Browser Cache

A browser loads a web page by making several requests to the server, for both dynamic resources and static resources like images, style sheets, JavaScript files etc. Since a web application is used by the same user several times, most of these resources are requested from the server repeatedly. The browser can store some of the resources in its cache, so that subsequent requests load the locally cached resource instead of making a server request, reducing the load on the web server.

Cache granularity

- Static files like images, style sheets, JavaScript files etc.

- Browsers also provide local storage (HTML5) and cookies which can be used for caching dynamic data.

How to populate cache

- Browser caching works by setting HTTP response headers, such as the Cache-Control header, on the resource to be cached, with a time to live (TTL).
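As a rough illustration of the header mentioned above, the following Python sketch builds a `Cache-Control` value for a given TTL. The `cache_headers` function and its parameters are hypothetical helpers written for this example, not part of any framework; only the `Cache-Control` header and its `max-age`, `public`, `private` and `no-store` directives come from HTTP itself.

```python
def cache_headers(ttl_seconds: int, shared: bool = True) -> dict:
    """Build response headers that let a browser cache a resource for ttl_seconds."""
    if ttl_seconds <= 0:
        # No TTL: tell the browser not to store the response at all.
        return {"Cache-Control": "no-store"}
    # "public" also allows intermediate caches to store the response;
    # "private" restricts caching to the user's own browser.
    scope = "public" if shared else "private"
    return {"Cache-Control": f"{scope}, max-age={ttl_seconds}"}


# Cache a static file for one day.
print(cache_headers(86400))  # {'Cache-Control': 'public, max-age=86400'}
```

A web application would attach these headers to the response for each static resource; how that is done depends on the server or framework in use.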

Regular Cache Refresh

- The browser requests the resource from the server again once the TTL has expired and refreshes its cache.

Forced Cache Refresh

- Cache refresh can be forced by changing a cache invalidation parameter in the URL of the resource. The cache invalidation parameter can be:

- a query parameter, e.g. http://server.com/js/library.js?timestamp

- a version number in the resource path, e.g. http://server.com/v2/js/library.js

In Single Page Applications (SPA), the initial page is always loaded from the server, but all its dependencies like images, style sheets, JavaScript files etc. are loaded using URLs that carry a cache invalidation parameter (a query parameter or a version number in the URL). When a new resource or a new version of the application is deployed to production, the cache invalidation parameter is changed to refresh the cache.
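Both forms of cache invalidation parameter can be generated with a small helper. The sketch below uses Python's standard `urllib.parse` module; the function names `with_version_param` and `with_version_path` and the query parameter name `v` are assumptions made for this example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_version_param(url: str, version: str, param: str = "v") -> str:
    """Add or replace a cache invalidation query parameter on a resource URL."""
    parts = urlsplit(url)
    # Keep the existing query parameters, dropping any older version parameter.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != param
    ]
    query.append((param, version))
    return urlunsplit(parts._replace(query=urlencode(query)))


def with_version_path(url: str, version: str) -> str:
    """Prefix the resource path with a version segment."""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(path=f"/{version}{parts.path}"))


print(with_version_param("http://server.com/js/library.js", "42"))
# http://server.com/js/library.js?v=42
print(with_version_path("http://server.com/js/library.js", "v2"))
# http://server.com/v2/js/library.js
```

Because the browser treats a URL with a new parameter value as a different resource, bumping the version on deployment makes it fetch a fresh copy even if the old one has not expired.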

Pros

- The user gets the best response time, with no network latency for cached resources.

- Can cache both static files and dynamic data.

Cons

- Load on the server is only marginally reduced, since requests for the same resource from other machines still hit the server.

- Dynamic data stored in HTML5 local storage could be a security risk, depending on the application, even though HTML5 local storage is accessible to the application's domain only.

Content Delivery Network (CDN)

Content Delivery Network is a large distributed system of servers deployed in multiple data centres across the Internet. The goal of a CDN is to serve content to end-users with high availability and high performance. CDNs serve a large fraction of the Internet content today, including web objects (text, graphics and scripts), downloadable objects (media files, software, documents), applications (e-commerce, portals), live streaming media, on-demand streaming media, and social networks. —from Wikipedia

Content Delivery Network (CDN) providers usually work with Internet Service Providers (ISPs) and telecom companies to add data centres at the last mile of the internet connection. Browser request latency is reduced since the servers handling requests for cached resources are close to the browser client. Browsers usually hold a limited number of connections to each host; if the number of requests to a host exceeds the browser's connection limit, the extra requests are queued. A CDN provides another benefit by distributing resources over several named domains, so the browser can handle more requests in parallel, which gives the user a better response time. Only static resources are cached in a CDN, since the TTL of resources is usually in the order of days and not seconds.

Cache granularity

- Static files like images, style sheets, JavaScript files etc.

How to populate cache

- Static resources such as images, style sheets and JavaScript files can be stored in the CDN using a tool or API provided by the CDN. The CDN provides a URL for each resource, which can then be used in the web application.

Regular Cache Refresh

- The CDN tool or API can be used to configure the time to live and when to replace a static resource on the CDN servers. But replicating a resource across the distributed CDN network takes time, so a CDN cannot be used for dynamic resources with a very short TTL.

Forced Cache Refresh

- As with the browser cache refresh above, a forced cache refresh can be achieved by introducing versioning in the URL to invalidate the cache.

Pros

- The user will experience a good response time with very little latency, since the resource is returned from the nearest server.

- Load on the server is greatly reduced, since all requests for the resource will be handled by the CDN.

Cons

- Cost of using CDN.

- Cache refresh takes time, as the resource has to be refreshed across a highly distributed network of servers all over the world.

- Suitable for static resources only, since cache refresh is not easy.

Reverse Proxy Cache

A reverse proxy server is similar to a normal proxy server: both act as an intermediary between the browser client and the web server. The main difference is that a normal proxy server is placed closer to the client and a reverse proxy server is placed closer to the web server. Since requests and responses go through the reverse proxy, it can cache the response to a URL and use it to respond to subsequent requests without hitting the web application server. A significant benefit of caching at the reverse proxy level is that dynamic resources can be cached with very low TTLs (a few seconds). A reverse proxy can cache data in memory or in an external cache management tool like memcached, which I will discuss later.

There are several reverse proxy servers to choose from. Varnish, Squid and Nginx are some of the options.
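To make the idea of caching dynamic responses for a few seconds concrete, here is a minimal Python sketch of a response cache keyed by URL with a short TTL and on-demand invalidation. It is not how Varnish, Squid or Nginx are implemented or configured; the `TTLResponseCache` class, the `render_home` function and the injectable `clock` parameter are all hypothetical and exist only for this illustration.

```python
import time
from typing import Callable, Dict, Tuple


class TTLResponseCache:
    """Hypothetical URL-keyed response cache with a short time to live."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the example can simulate time passing
        self._entries: Dict[str, Tuple[float, str]] = {}  # url -> (expiry, body)

    def fetch(self, url: str, origin: Callable[[str], str]) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and entry[0] > now:
            # Still fresh: answer from the cache without calling the web application.
            return entry[1]
        # Missing or expired: forward the request and cache the new response.
        body = origin(url)
        self._entries[url] = (now + self._ttl, body)
        return body

    def invalidate(self, url: str) -> None:
        # Forced refresh: drop the cached response so the next request hits the origin.
        self._entries.pop(url, None)


origin_calls = []


def render_home(url: str) -> str:
    # Stand-in for the web application rendering a dynamic page.
    origin_calls.append(url)
    return f"<html>home #{len(origin_calls)}</html>"


fake_now = [0.0]
cache = TTLResponseCache(ttl_seconds=5, clock=lambda: fake_now[0])

cache.fetch("/", render_home)  # miss: the application renders the page
cache.fetch("/", render_home)  # hit: served from the cache
fake_now[0] = 6.0              # simulate the 5 second TTL expiring
cache.fetch("/", render_home)  # expired: the application renders again
cache.invalidate("/")          # forced refresh on demand
cache.fetch("/", render_home)  # miss again after invalidation

print(len(origin_calls))  # 3
```

Even a TTL of a few seconds means a busy page is rendered once per TTL window instead of once per request.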

Cache granularity

- Static files like images, style sheets, JavaScript files etc.

- Dynamic page responses to HTTP requests.

How to populate cache

- Other than the reverse proxy server's own configuration for caching certain resources, proxy server caching works using HTTP headers, such as the Cache-Control and Expires headers, on the resource to be cached, with a time to live (TTL).

Regular Cache Refresh

- Reverse proxy servers provide ways to invalidate and refresh the cache, and they request the resource from the server again once the TTL has expired to refresh the cache.

Forced Cache Refresh

- Reverse proxy servers can invalidate existing cache on demand.

Pros

- Web server load is reduced significantly, as requests from multiple machines can use the same cache, whereas a browser cache only serves requests from a single machine.

- Reverse proxy servers can cache both static and dynamic resources. E.g. the home page of a site, which is the same for all clients, can be cached for a few seconds at the reverse proxy.

- Most reverse proxy servers act as static file servers as well, so the web application never gets requests for static resources.

Cons

- The user will experience some latency, since the request has to reach the distant reverse proxy to get a response.

- Reverse proxy processing and caching need their own hardware, which means added hardware costs compared to CDN costs. But since most reverse proxies can serve multiple purposes (as a load balancer, as a static file server and as a caching layer), the added cost of an additional box should not be a problem.

The next part of this post will cover different ways of caching within the web application.